Machine learning takes materials modeling into new era

Deep learning approach enables accurate electronic structure calculations at large scales

The arrangement of electrons in matter, known as the electronic structure, plays a crucial role in fundamental as well as applied research, such as drug design and energy storage. However, the lack of a simulation technique that offers both high fidelity and scalability across different time and length scales has long been a roadblock to progress in these technologies. Researchers from the Center for Advanced Systems Understanding (CASUS) at the Helmholtz-Zentrum Dresden-Rossendorf (HZDR) in Görlitz, Germany, and Sandia National Laboratories in Albuquerque, New Mexico, USA, have now pioneered a machine learning-based simulation method, published in npj Computational Materials, that supersedes traditional electronic structure simulation techniques. Their Materials Learning Algorithms (MALA) software stack enables access to previously unattainable length scales.

Electrons are elementary particles of fundamental importance. Their quantum mechanical interactions with one another and with atomic nuclei give rise to a multitude of phenomena observed in chemistry and materials science. Understanding and controlling the electronic structure of matter provides insights into the reactivity of molecules, the structure and energy transport within planets, and the mechanisms of material failure.

Scientific challenges are increasingly being addressed through computational modeling and simulation, leveraging the capabilities of high-performance computing. However, a significant obstacle to realistic simulations with quantum precision is the lack of a predictive modeling technique that combines high accuracy with scalability across different length and time scales. Classical atomistic simulation methods can handle large and complex systems, but because they omit the quantum electronic structure, their applicability is limited. Conversely, simulation methods that do not rely on assumptions such as empirical models and parameter fitting (first principles methods) offer high fidelity but are computationally demanding. For instance, the computational cost of density functional theory (DFT), a widely used first principles method, grows with the cube of the system size: doubling the number of atoms raises the cost roughly eightfold, which restricts its predictive capabilities to small scales.
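What cubic scaling means in practice can be checked with a back-of-the-envelope calculation. The minimal sketch below compares an idealized cubic cost model with a linear one, included only as a point of comparison, and ignores all prefactors; the function name `relative_cost` and the 1,000-atom reference size are illustrative assumptions, not measured timings of any code.

```python
def relative_cost(n_atoms: int, n_ref: int, exponent: int) -> float:
    """Cost of a calculation on n_atoms relative to one on n_ref atoms,
    assuming the cost grows as N**exponent (prefactors ignored)."""
    return (n_atoms / n_ref) ** exponent


# Idealized comparison: cubic scaling (as for conventional DFT) vs. linear scaling.
n_ref = 1_000
for n in (1_000, 2_000, 10_000, 100_000):
    cubic = relative_cost(n, n_ref, 3)
    linear = relative_cost(n, n_ref, 1)
    print(f"{n:>7} atoms: cubic x{cubic:,.0f}, linear x{linear:,.0f}")
```

Under these assumptions, going from 1,000 to 100,000 atoms multiplies a cubic cost by a factor of one million but a linear cost only by 100, which is why the gap widens so quickly as systems grow.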

Hybrid approach based on deep learning

The researchers have now presented a novel simulation method called the Materials Learning Algorithms (MALA) software stack. In computer science, a software stack is a collection of algorithms and software components that are combined to create an application for solving a particular problem. Lenz Fiedler, a Ph.D. student and key developer of MALA at CASUS, explains, "MALA integrates machine learning with physics-based approaches to predict the electronic structure of materials. It employs a hybrid approach, utilizing an established machine learning method called deep learning to accurately predict local quantities, complemented by physics algorithms for computing global quantities of interest."
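The division of labor Fiedler describes can be illustrated with a toy model. In this minimal sketch, the "local quantity" is assumed to be a single electron-density value per grid point; a hypothetical fixed linear layer (`WEIGHTS`, `BIAS`) stands in for the trained neural network, and a plain sum over grid cells stands in for the physics step that yields a global quantity, here the total electron count. All names and numbers are illustrative; MALA's actual models and post-processing are considerably more involved.

```python
import math
from typing import Sequence

# Hypothetical stand-in for a trained network (illustrative values only):
# one linear layer followed by a softplus, so predictions are non-negative.
WEIGHTS = [0.8, -0.3, 0.5]
BIAS = 0.1


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def predict_local_density(descriptor: Sequence[float]) -> float:
    """Machine learning step: map one grid point's descriptor to a local value."""
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, descriptor))
    return softplus(z)


def integrate_over_grid(values: Sequence[float], voxel_volume: float) -> float:
    """Physics step: integrate local values into a global quantity
    (a simple Riemann sum over uniform grid cells)."""
    return sum(values) * voxel_volume


def electron_count(descriptors: Sequence[Sequence[float]], voxel_volume: float) -> float:
    """Hybrid pipeline: local ML predictions first, then a global integral."""
    local_values = [predict_local_density(d) for d in descriptors]
    return integrate_over_grid(local_values, voxel_volume)


# Usage: eight grid cells with identical (all-zero) descriptors.
print(electron_count([[0.0, 0.0, 0.0]] * 8, voxel_volume=0.125))
```

The point of the split is that the learned part only ever sees one grid point at a time, while quantities that depend on the whole system are assembled afterwards by deterministic numerics.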

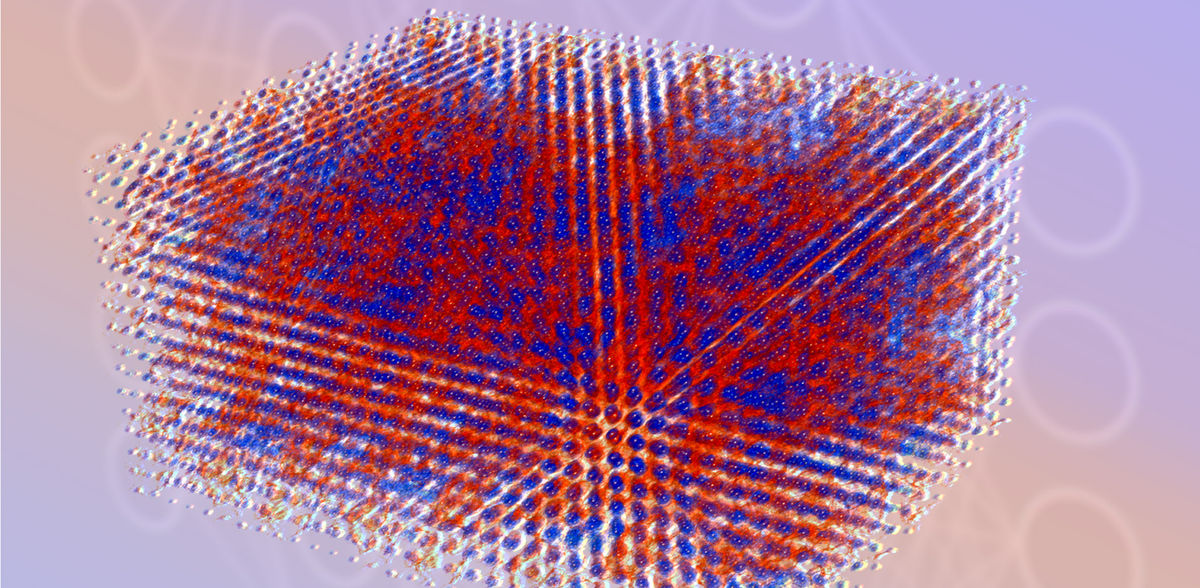

The MALA software stack takes the arrangement of atoms in space as input and generates fingerprints known as bispectrum components, which encode the spatial arrangement of atoms around each point of a Cartesian grid. The machine learning model in MALA is trained to predict the electronic structure at each grid point from this atomic neighborhood. A significant advantage of MALA is that its machine learning model is independent of the system size, so it can be trained on data from small systems and deployed at any scale.
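The property described above, that each grid point sees only its local atomic neighborhood through a fixed-length fingerprint, can be sketched in a few lines. Computing actual bispectrum components is considerably more involved, so this sketch swaps in a simpler radial descriptor (Gaussian shells with a smooth cutoff, in the spirit of Behler-Parrinello symmetry functions). `CUTOFF`, `CENTERS`, `WIDTH` and `radial_descriptor` are hypothetical names and values chosen for illustration, not MALA settings.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

# Illustrative parameters (assumptions, not MALA settings).
CUTOFF = 4.0                    # radius of the neighborhood around a grid point
CENTERS = [0.0, 1.0, 2.0, 3.0]  # radial shells sampled by the descriptor
WIDTH = 0.5                     # Gaussian width of each shell


def cutoff_fn(r: float) -> float:
    """Decays smoothly to zero at CUTOFF, so distant atoms contribute nothing."""
    return 0.5 * (math.cos(math.pi * r / CUTOFF) + 1.0) if r < CUTOFF else 0.0


def radial_descriptor(grid_point: Point, atoms: Sequence[Point]) -> List[float]:
    """Fixed-length fingerprint of the atoms surrounding one grid point.
    A simpler radial stand-in for bispectrum components."""
    features = [0.0] * len(CENTERS)
    for atom in atoms:
        r = math.dist(grid_point, atom)
        fc = cutoff_fn(r)
        if fc == 0.0:
            continue  # outside the local neighborhood
        for k, mu in enumerate(CENTERS):
            features[k] += math.exp(-((r - mu) ** 2) / (2 * WIDTH ** 2)) * fc
    return features


# Usage: adding 1,000 far-away atoms leaves the fingerprint unchanged.
small = [(1.0, 0.0, 0.0), (0.0, 1.5, 0.0)]
large = small + [(50.0 + i, 0.0, 0.0) for i in range(1000)]
print(radial_descriptor((0.0, 0.0, 0.0), small) == radial_descriptor((0.0, 0.0, 0.0), large))
```

Because the fingerprint has the same length regardless of the total number of atoms, and atoms beyond the cutoff contribute nothing, a model trained on fingerprints from small cells can in principle be evaluated on a grid spanning a much larger system.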

In their publication, the researchers showed how effective this strategy is. For smaller systems of up to a few thousand atoms, they achieved a speedup of more than 1,000 times compared to conventional algorithms. Furthermore, the team used MALA to accurately perform electronic structure calculations at a large scale, involving over 100,000 atoms. Notably, this required only modest computational effort, whereas calculations of this size lie beyond the reach of conventional DFT codes.

Attila Cangi, the Acting Department Head of Matter under Extreme Conditions at CASUS, explains: "As the system size increases and more atoms are involved, DFT calculations become impractical, whereas MALA's speed advantage continues to grow. The key breakthrough of MALA lies in its capability to operate on local atomic environments, enabling accurate numerical predictions that are minimally affected by system size. This groundbreaking achievement opens up computational possibilities that were once considered unattainable."

Boost for applied research expected

Cangi aims to push the boundaries of electronic structure calculations by leveraging machine learning: "We anticipate that MALA will spark a transformation in electronic structure calculations, as we now have a method to simulate significantly larger systems at an unprecedented speed. In the future, researchers will be able to address a broad range of societal challenges based on a significantly improved baseline, including developing new vaccines and novel materials for energy storage, conducting large-scale simulations of semiconductor devices, studying material defects, and exploring chemical reactions for converting the atmospheric greenhouse gas carbon dioxide into climate-friendly minerals."

Furthermore, MALA's approach is particularly well suited to high-performance computing (HPC). Because the points of its computational grid can be processed independently, MALA can spread the work of ever larger systems across HPC resources, particularly graphics processing units (GPUs). Siva Rajamanickam, a staff scientist and expert in parallel computing at Sandia National Laboratories, explains, "MALA's algorithm for electronic structure calculations maps well to modern HPC systems with distributed accelerators. The capability to decompose work and execute in parallel different grid points across different accelerators makes MALA an ideal match for scalable machine learning on HPC resources, leading to unparalleled speed and efficiency in electronic structure calculations."
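The decomposition Rajamanickam describes amounts to splitting the grid into independent pieces. The following minimal sketch assumes a per-point `local_model` function, such as a trained network evaluated on one grid point's fingerprint, divides the grid points into chunks and evaluates the chunks concurrently. A Python thread pool stands in here for the distributed accelerators of a real HPC system, and `split_into_chunks` and `evaluate_in_parallel` are hypothetical helpers, not part of MALA's API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

P = TypeVar("P")  # grid point type
R = TypeVar("R")  # per-point prediction type


def split_into_chunks(items: Sequence[P], n_chunks: int) -> List[Sequence[P]]:
    """Divide the grid points into n_chunks contiguous, nearly equal slices."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def evaluate_in_parallel(
    grid_points: Sequence[P], local_model: Callable[[P], R], n_workers: int = 4
) -> List[R]:
    """Evaluate local_model on every grid point, one chunk per worker."""
    chunks = split_into_chunks(grid_points, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Each worker handles its slice independently; no data is exchanged.
        results = list(pool.map(lambda chunk: [local_model(p) for p in chunk], chunks))
    # pool.map preserves chunk order, so flattening restores the grid order.
    return [value for chunk_result in results for value in chunk_result]


# Usage: a toy "model" that squares each grid index.
print(evaluate_in_parallel(list(range(10)), lambda p: p * p, n_workers=3))
```

Because no grid point depends on another point's prediction, adding workers only requires splitting the grid more finely; the results are reassembled in grid order before any global quantity is computed.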

Apart from the developing partners HZDR and Sandia National Laboratories, MALA is already employed by institutions and companies such as the Georgia Institute of Technology, North Carolina A&T State University, SambaNova Systems Inc., and Nvidia Corp.